Farnoush Rezaei Jafari

I am a doctoral researcher in the Machine Learning / Intelligent Data Analysis group at Technische Universität Berlin and BIFOLD (Berlin Institute for the Foundations of Learning and Data), working with Prof. Dr. Klaus-Robert Müller. My research focuses on improving the interpretability and efficiency of multimodal LLMs, as well as vision and language models.

Before starting my Ph.D., I completed my M.Sc. in Computer Science at TU Berlin in 2021. My Master’s thesis, titled “Analyzing the Importance of Temporal Information in 3D ConvNets for Human Action Recognition Through Explanations”, was supervised by Prof. Dr. Klaus-Robert Müller and Prof. Dr. Jürgen Gall.

news

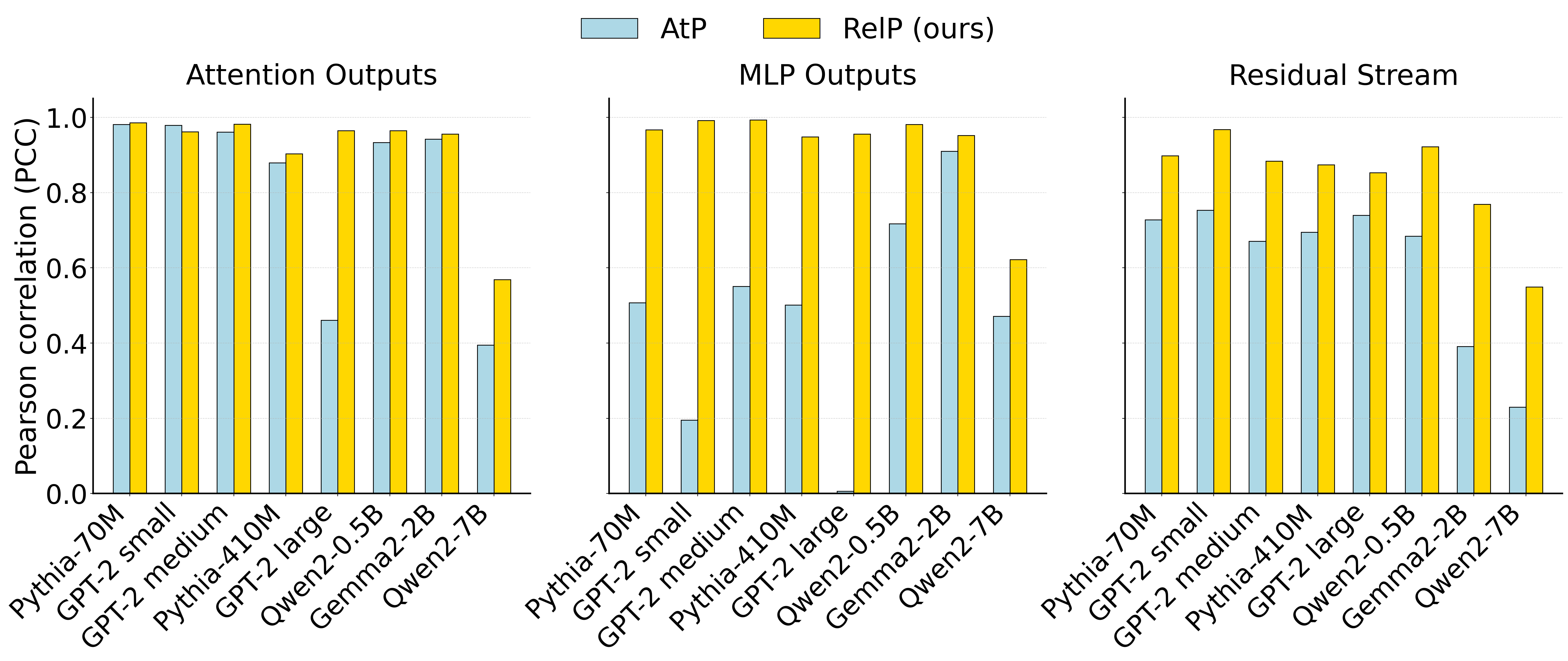

| Oct 22, 2025 | 🥳 Our paper, RelP accepted as a spotlight at Mechanistic Interpretability Workshop at NeurIPS 2025. |

|---|---|

| Mar 17, 2025 | 🎉 I have been accepted into the MATS 8.0 Training Program, led by Neel Nanda. |

| Jan 16, 2025 | 🎙️ Invited talk at Microsoft ASG about our NeurIPS 2024 paper, MambaLRP. |

| Jan 6, 2025 | 🥳 Our paper Towards Symbolic XAI has got accepted at Information Fusion. |

| Sep 27, 2024 | 🎉 Our paper, MambaLRP, has got accepted at NeurIPS 2024. |

| Aug 30, 2024 | 📢 Check out our new preprint Towards Symbolic XAI. |

| Jun 11, 2024 | 📢 Check out our new preprint on the interpretability of selective state space sequence models, called MambaLRP. |

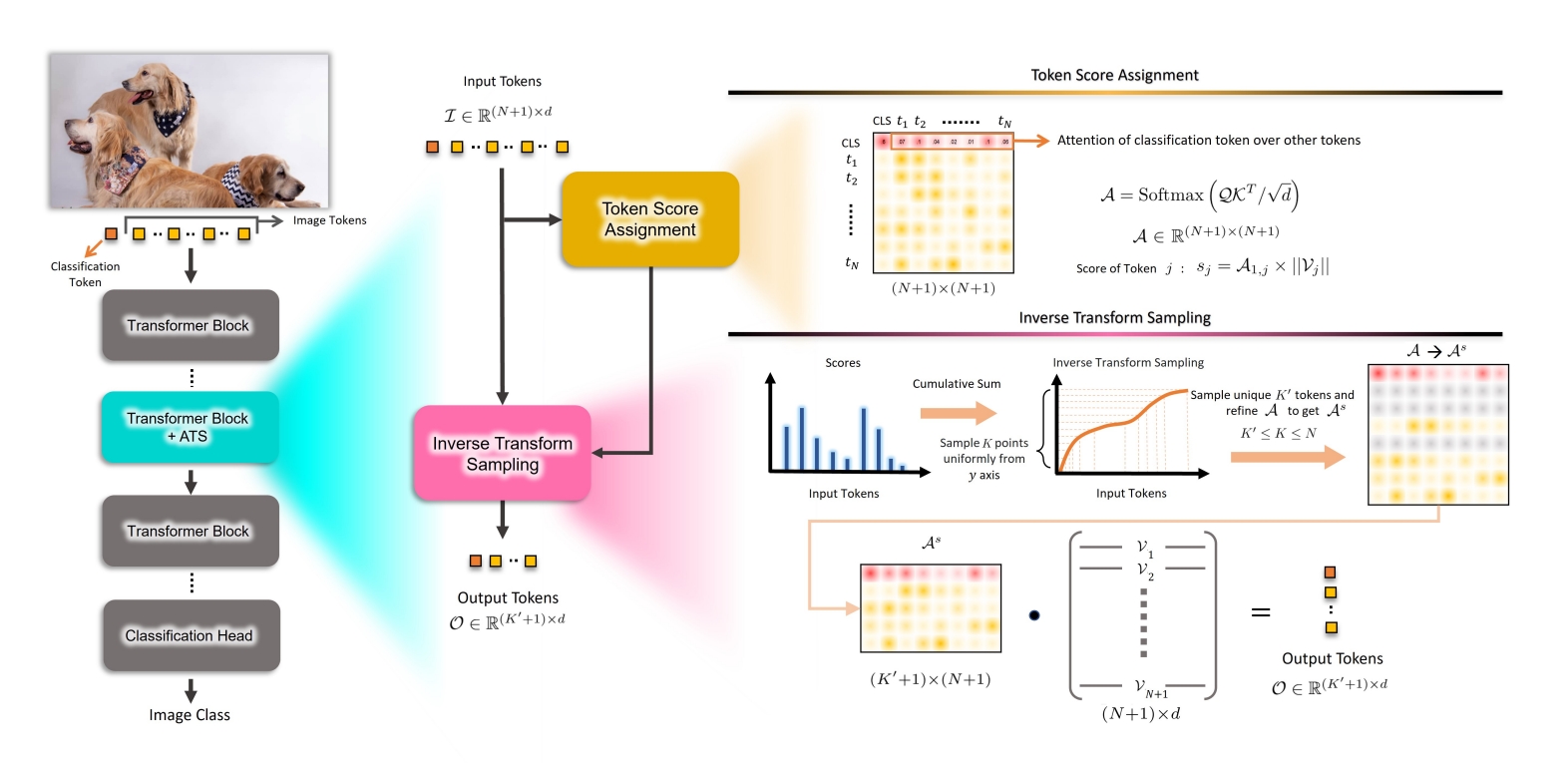

| Jul 3, 2022 | 🥳 Our paper, ATS is accepted at ECCV 2022 as an oral presentation. |

| Jun 14, 2022 | 🎙️ Presented our paper, ATS at Franco-German Workshop at Inria. |

| Nov 17, 2021 | 🚀 I started my PhD journey. |

Publications

- Information Fusion

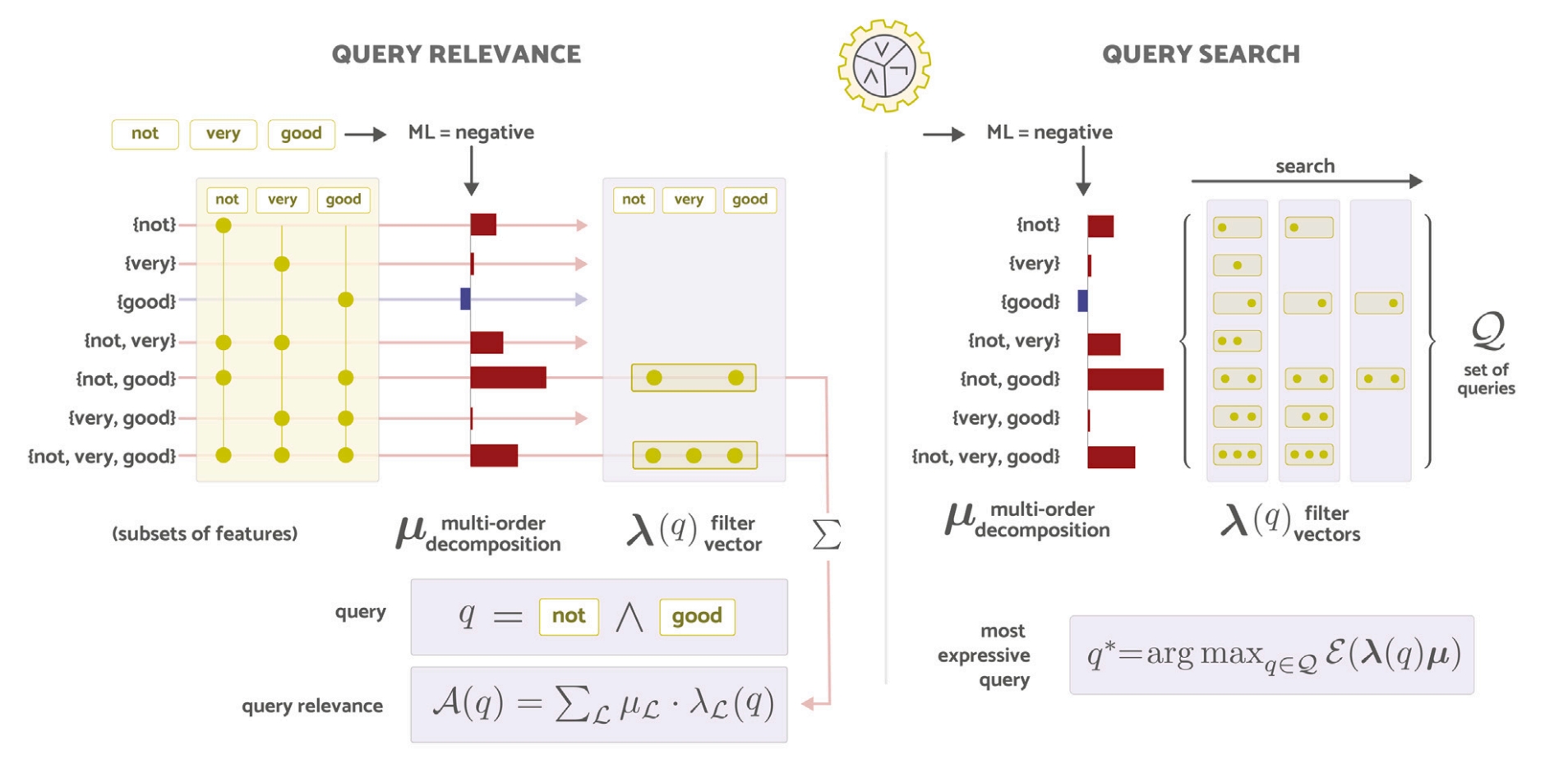

Towards Symbolic XAI – Explanation Through Human Understandable Logical Relationships Between Features

Information Fusion 2025

Compositional Reasoning in LLMs & Vision Transformers; Subgraph-Level Model Analysis; Bridging Mechanistic Interpretability with Symbolic Reasoning; Logical Explanations for Transformers & GNNs

- NeurIPS

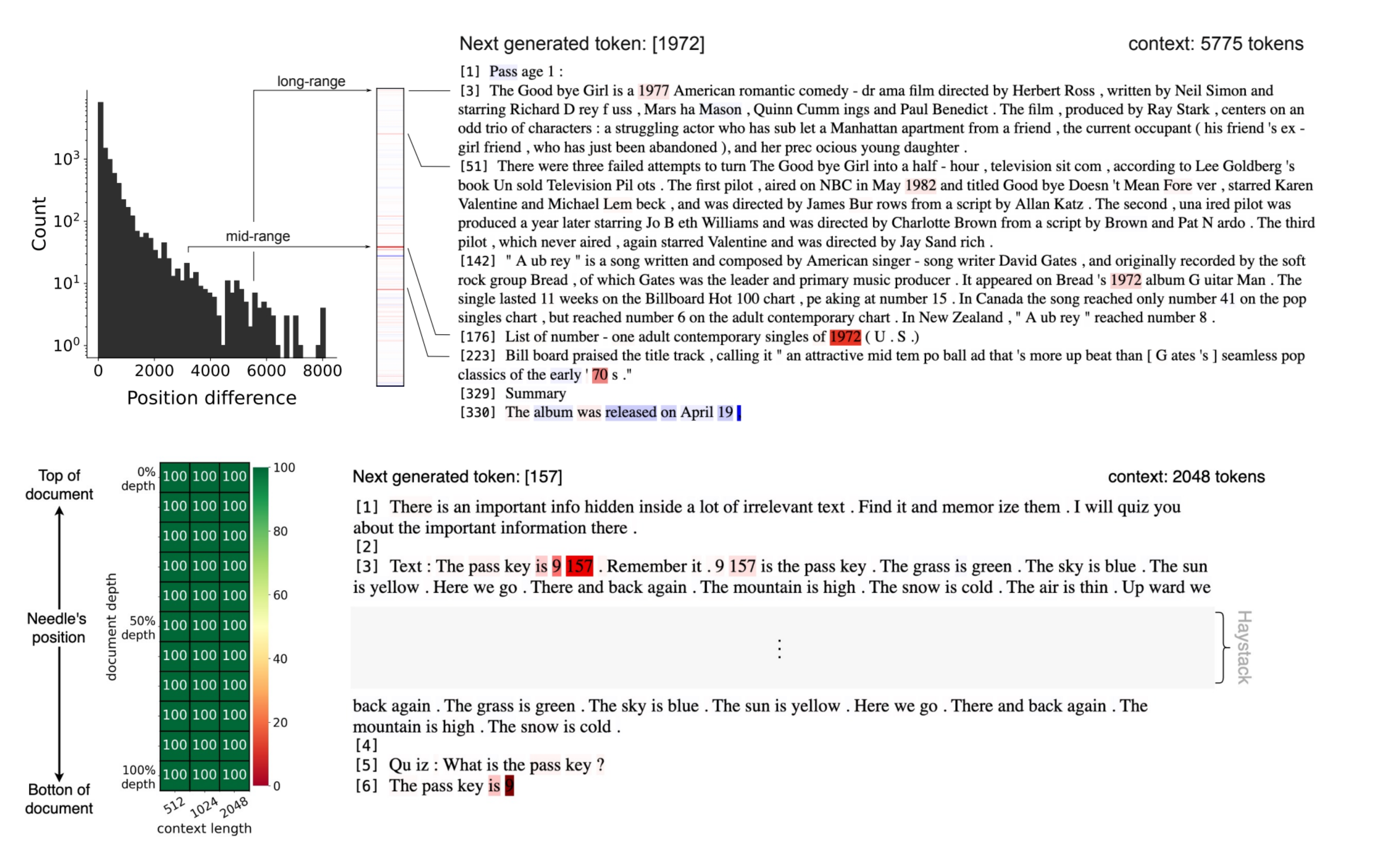

MambaLRP: Explaining Selective State Space Sequence Models

Conference on Neural Information Processing Systems (NeurIPS) 2024

State-Space Models; Mamba LLMs; Vision Mamba; Interpretability; Identifying Model Biases; Analyzing Long-Range Dependencies; Introducing a Novel Evaluation Metric for Needle-in-a-Haystack